1. Deploying the DeepSeek Model on a Linux Server

To install and use a model through Ollama on Linux, follow these steps:

Step 1: Install Ollama

- Install Ollama with the following command:

curl -sSfL https://ollama.com/install.sh | sh

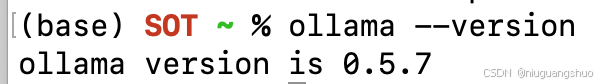

- Verify the installation. Once the installer finishes, confirm that Ollama is available by running:

ollama --version

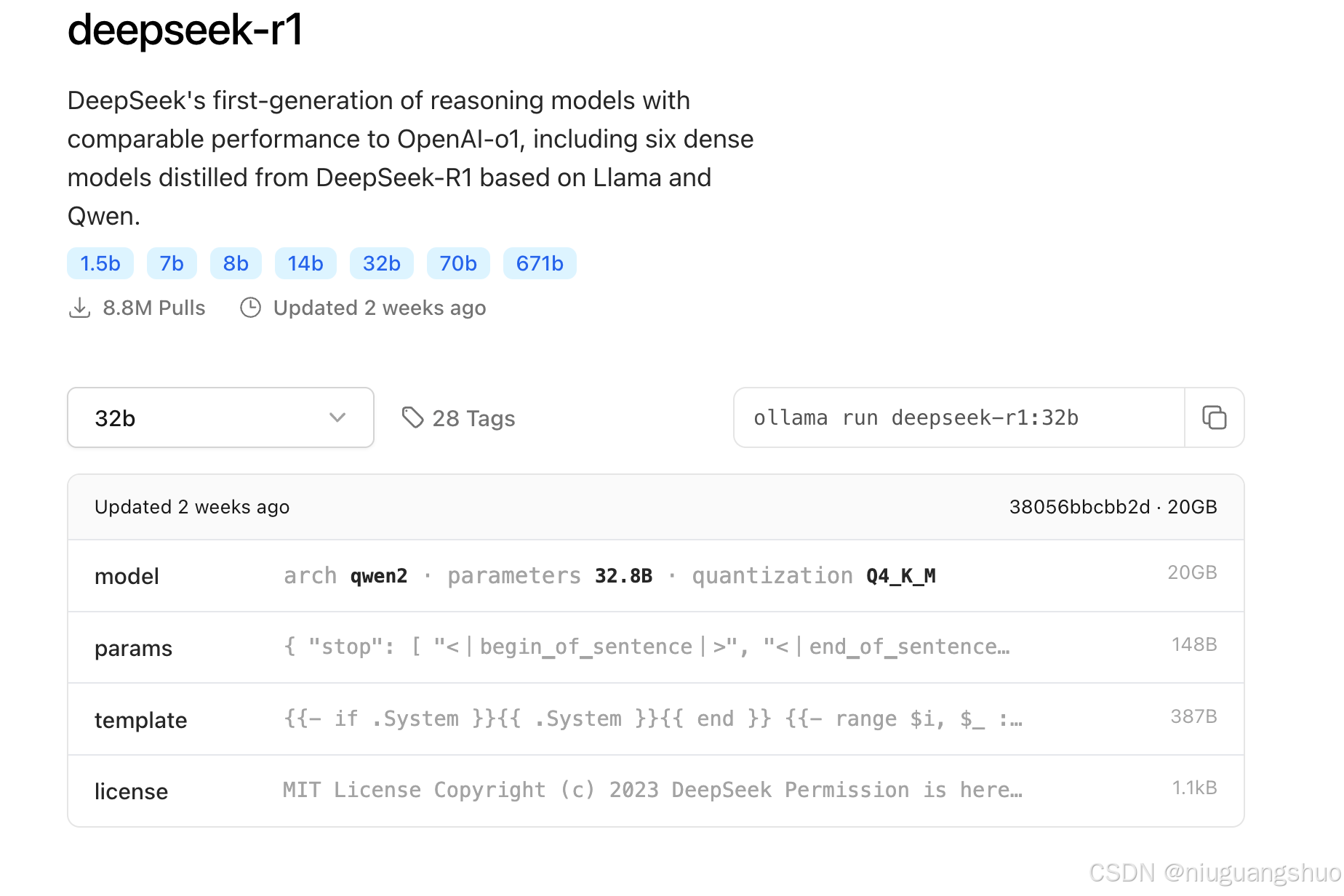

Step 2: Download the model

ollama run deepseek-r1:32b

This downloads the DeepSeek R1 32B model (on first use) and starts it.

DeepSeek R1 distilled models

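| Model | Parameters | Base architecture | Typical use |
|---|---|---|---|
| DeepSeek-R1-Distill-Qwen-1.5B | 1.5B | Qwen2.5 | Mobile devices or resource-constrained endpoints |
| DeepSeek-R1-Distill-Qwen-7B | 7B | Qwen2.5 | General-purpose text generation tools |
| DeepSeek-R1-Distill-Llama-8B | 8B | Llama3.1 | Everyday text processing for small businesses |
| DeepSeek-R1-Distill-Qwen-14B | 14B | Qwen2.5 | Desktop-class applications |
| DeepSeek-R1-Distill-Qwen-32B | 32B | Qwen2.5 | Domain-specific question-answering systems |
| DeepSeek-R1-Distill-Llama-70B | 70B | Llama3.3 | Demanding scenarios such as scientific and academic research |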

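The RTX 4090 has 24 GB of VRAM, and the 32B model needs roughly 22 GB at 4-bit quantization, so it fits this card. In reasoning benchmarks the 32B model performs well, approaching the 70B model's reasoning ability while demanding far less hardware.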
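As a rough sanity check on that sizing, the sketch below computes the memory taken by the weights alone. The 6 GB overhead (KV cache, runtime buffers) is an assumption chosen to match the roughly 22 GB figure quoted above, not a measured value, and the function names are made up for this illustration.

```python
def estimate_weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory needed for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits(params_billion: float, bits_per_weight: float,
         vram_gb: float, overhead_gb: float) -> bool:
    """True if weights plus an assumed overhead fit in the given VRAM."""
    return estimate_weight_gb(params_billion, bits_per_weight) + overhead_gb <= vram_gb


# overhead_gb=6 is an assumption for illustration, not a measurement.
print("32B at 4-bit, weights only (GB):", estimate_weight_gb(32, 4))
print("32B fits in 24 GB:", fits(32, 4, vram_gb=24, overhead_gb=6))
print("70B fits in 24 GB:", fits(70, 4, vram_gb=24, overhead_gb=6))
```

Real quantized files use slightly more than 4 bits per weight on average, so treat the result as a lower bound.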

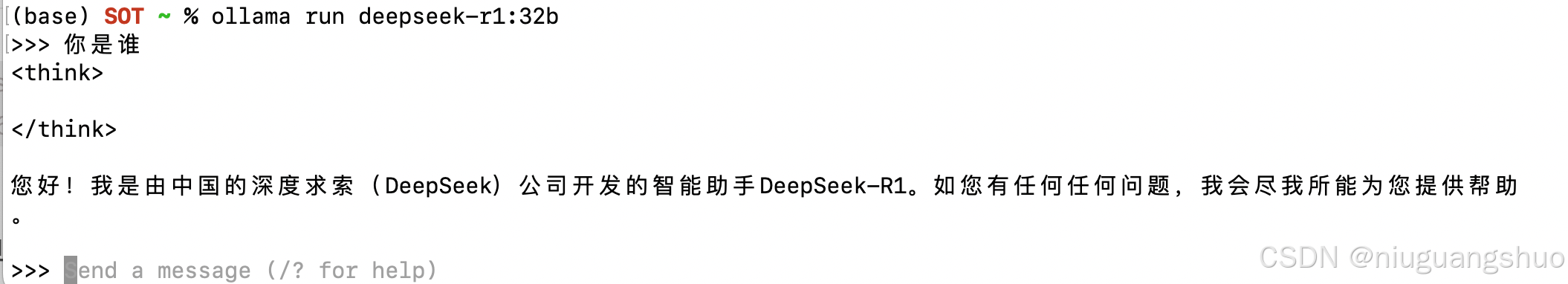

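Step 3: Run the model

ollama run deepseek-r1:32b

With the steps above you can already use DeepSeek from the command line on the Linux server. That is not very convenient, though, so the following sections describe a friendlier setup.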

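2. Configuring the Ollama Service on the Linux Server

1. Set up the Ollama service configuration

Set the environment variable OLLAMA_HOST=0.0.0.0 so that the Ollama service listens on all network interfaces, which allows remote access.

sudo vi /etc/systemd/system/ollama.service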

[Unit]

Description=Ollama Service

After=network-online.target

[Service]

ExecStart=/usr/local/bin/ollama serve

User=ollama

Group=ollama

Restart=always

RestartSec=3

Environment="OLLAMA_HOST=0.0.0.0"

Environment="PATH=/usr/local/cuda/bin:/home/bytedance/miniconda3/bin:/home/bytedance/miniconda3/condabin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin"

[Install]

WantedBy=default.target


2. Reload and restart the Ollama service

sudo systemctl daemon-reload

sudo systemctl restart ollama

3. Verify that the Ollama service is running

Run the following command to confirm that the Ollama service is listening on all network interfaces:

sudo netstat -tulpn | grep ollama

You should see output similar to the following, showing that the Ollama service is listening on all network interfaces (0.0.0.0):

tcp 0 0 0.0.0.0:11434 0.0.0.0:* LISTEN - ollama

4. Configure the firewall to allow remote access

For the Linux server to accept outside connections to the Ollama service, the firewall must allow traffic on port 11434.

sudo ufw allow 11434/tcp

sudo ufw reload

5. Verify the firewall rule

Make sure the rule was added and port 11434 is open. Check the firewall status with:

sudo ufw status

Status: active

To Action From

-- ------ ----

22/tcp ALLOW Anywhere

11434/tcp ALLOW Anywhere

22/tcp (v6) ALLOW Anywhere (v6)

11434/tcp (v6) ALLOW Anywhere (v6)
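The firewall listing shows the rule exists, but not that the port is reachable from another machine. A minimal sketch of a TCP reachability check in Python follows; `is_port_open` is a name made up for this example, and the address in the usage note is the server address used in this article.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

From the remote machine you would call `is_port_open("10.37.96.186", 11434)`. A True result only means something accepts connections on that port; it does not prove that the listener is Ollama.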


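6. Test remote access

With the configuration above in place, you can test access to the Ollama service from a remote device such as a Mac.

Test the connection from the remote device:

Open a terminal on the Mac and run the following command to test the connection to the Ollama service: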

curl http://10.37.96.186:11434/api/version

Output:

{"version":"0.5.7"}
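The same check can be scripted, for example as a health probe. The sketch below uses only the Python standard library; `fetch_version` and `parse_version` are illustrative names, and the base URL you pass in is whatever your own server address is.

```python
import json
import urllib.request


def parse_version(body: bytes) -> str:
    """Extract the "version" field from the /api/version response body."""
    return json.loads(body)["version"]


def fetch_version(base_url: str, timeout: float = 5.0) -> str:
    """GET <base_url>/api/version and return the version string."""
    with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
        return parse_version(resp.read())
```

Calling `fetch_version("http://10.37.96.186:11434")` against the server in this article should return the same version string that curl printed.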

Test a question-and-answer request:

curl -X POST http://10.37.96.186:11434/api/generate \
-H "Content-Type: application/json" \
-d '{"model": "deepseek-r1:32b", "prompt": "你是谁?"}'

Output:

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.118616168Z","response":"\u003cthink\u003e","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.150938966Z","response":"\n\n","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.175255854Z","response":"\u003c/think\u003e","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.199509353Z","response":"\n\n","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.223657359Z","response":"您好","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.24788375Z","response":"!","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.272068174Z","response":"我是","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.296163417Z","response":"由","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.320515728Z","response":"中国的","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.344646528Z","response":"深度","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.36880216Z","response":"求","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.393006489Z","response":"索","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.417115966Z","response":"(","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.441321254Z","response":"Deep","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.465439117Z","response":"Seek","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.489619415Z","response":")","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.51381827Z","response":"公司","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.538012781Z","response":"开发","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.562186246Z","response":"的","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.586331325Z","response":"智能","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.610539651Z","response":"助手","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.634769989Z","response":"Deep","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.659134003Z","response":"Seek","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.683523205Z","response":"-R","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.70761762Z","response":"1","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.731953604Z","response":"。","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.756135462Z","response":"如","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.783480232Z","response":"您","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.807766337Z","response":"有任何","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.831964079Z","response":"任何","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.856229156Z","response":"问题","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.880487159Z","response":",","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.904710537Z","response":"我会","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.929026993Z","response":"尽","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.953239249Z","response":"我","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.977496819Z","response":"所能","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:16.001763128Z","response":"为您提供","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:16.026068523Z","response":"帮助","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:16.050242581Z","response":"。","done":false}

{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:16.074454593Z","response":"","done":true,"done_reason":"stop","context":[151644,105043,100165,30,151645,151648,271,151649,198,198,111308,6313,104198,67071,105538,102217,30918,50984,9909,33464,39350,7552,73218,100013,9370,100168,110498,33464,39350,12,49,16,1773,29524,87026,110117,99885,86119,3837,105351,99739,35946,111079,113445,100364,1773],"total_duration":3872978599,"load_duration":2811407308,"prompt_eval_count":6,"prompt_eval_duration":102000000,"eval_count":40,"eval_duration":958000000}
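Each line of that output is a separate JSON object carrying one fragment of the answer, and the final line has "done": true. A minimal sketch of how a client might stitch the fragments back together is shown below; the `sample` list is an abbreviated copy of the output above with only the fields the code reads, and both function names are made up for this example.

```python
import json
import re


def join_stream(lines):
    """Concatenate the "response" fragments of a streamed /api/generate reply."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between chunks
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final chunk carries statistics, not more text
    return "".join(parts)


def strip_think(text):
    """Remove the <think>...</think> block that DeepSeek-R1 emits before its answer."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


# Abbreviated sample based on the output above (raw strings keep the JSON escapes).
sample = [
    r'{"response":"\u003cthink\u003e","done":false}',
    r'{"response":"\n\n","done":false}',
    r'{"response":"\u003c/think\u003e","done":false}',
    r'{"response":"\n\n","done":false}',
    '{"response":"您好","done":false}',
    '{"response":"!","done":false}',
    '{"response":"","done":true,"done_reason":"stop"}',
]

print(strip_think(join_stream(sample)))
```

If you do not need token-by-token output, adding "stream": false to the request body makes Ollama return a single JSON object with the full answer in "response".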


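At this point the Ollama service is configured on the Linux server and the DeepSeek model has been reached remotely from the Mac. The next section covers installing a Web UI on the Mac to make interacting with the model more convenient.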

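3. Installing the Web UI on a Mac

To interact more comfortably with the DeepSeek model on the remote Linux server, you can install a Web UI tool on the Mac. We recommend Open Web UI, a browser-based interface that supports multiple AI model backends, including Ollama.

1. Install open-webui with conda

Open a terminal and run the following commands to create a new conda environment with Python 3.11, activate it, and install open-webui: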

conda create -n open-webui-env python=3.11

conda activate open-webui-env

pip install open-webui


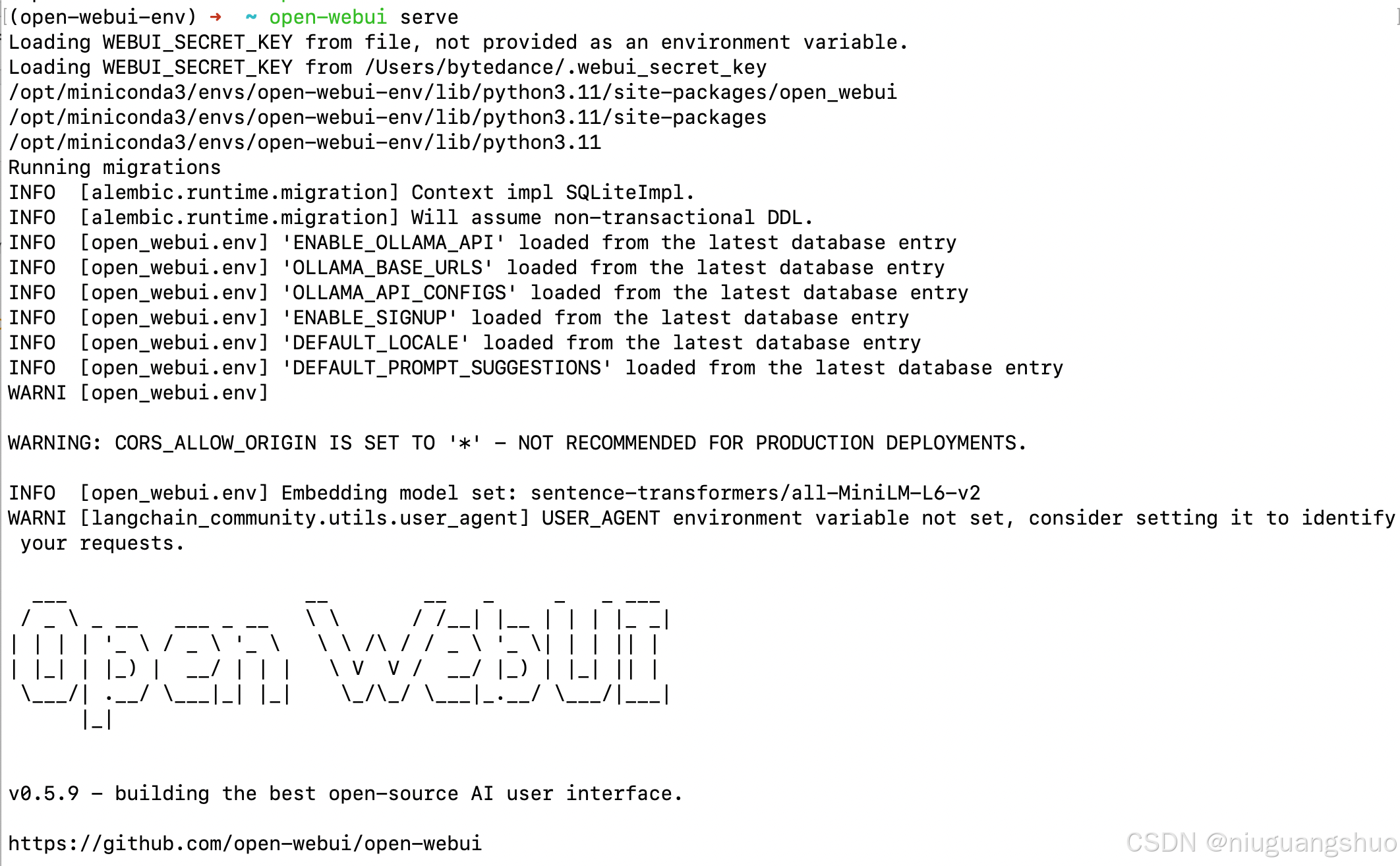

2. Start open-webui

open-webui serve

3. Open it in a browser

http://localhost:8080/

- Log in as the administrator (the first registered user).

-

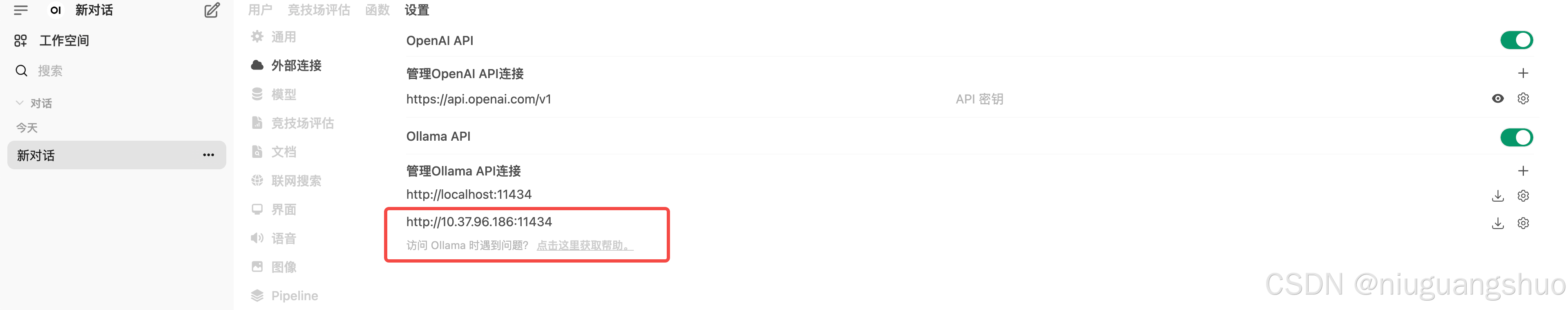

在Open webui界面中,依次点击“展开左侧栏”(左上角三道杠)–>“头像”(左下角)–>管理员面板–>设置(上侧)–>外部连接

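- In the Ollama API row under External Connections, turn the switch on and enter http://10.37.96.186:11434 (this is my server's address; use your own).

- Click the "Save" button at the bottom right.

- Click "New Chat" (top left) and check whether the model list loads correctly. If it does, the setup is complete.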
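If the model list stays empty, it helps to ask the Ollama server directly which models it has. The sketch below queries Ollama's /api/tags endpoint, which lists locally available models; `list_models` and `parse_model_names` are illustrative names, not part of Open WebUI or Ollama.

```python
import json
import urllib.request


def parse_model_names(body: bytes) -> list:
    """Return the model names from an /api/tags response body."""
    data = json.loads(body)
    return [model["name"] for model in data.get("models", [])]


def list_models(base_url: str, timeout: float = 5.0) -> list:
    """GET <base_url>/api/tags and return the names of the available models."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read())
```

With the setup in this article, `list_models("http://10.37.96.186:11434")` would be expected to include deepseek-r1:32b. If it does and the Web UI still shows nothing, the problem is more likely in the connection settings than on the server.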

4. Enjoy your locally deployed DeepSeek model
