SparkTTS 的简介

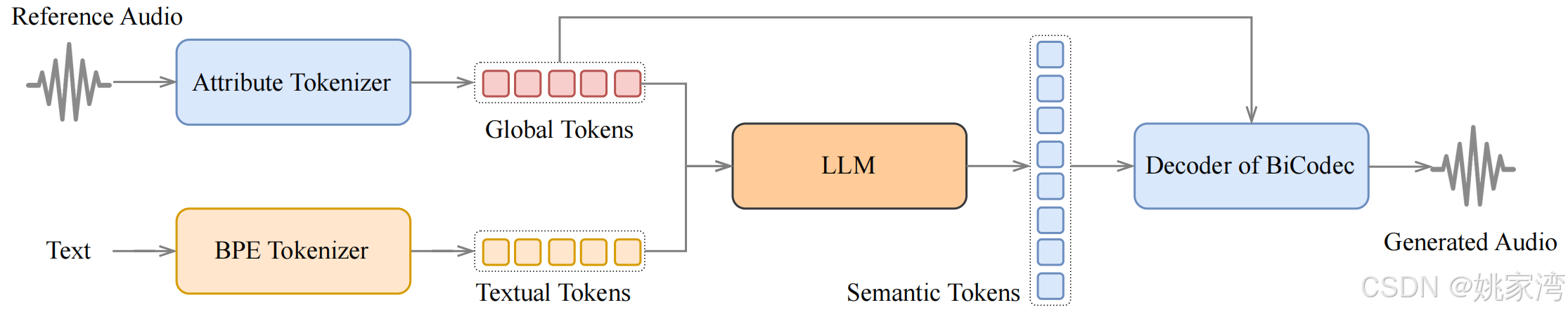

Spark-TTS是一种基于SpardAudio团队提出的 BiCodec 构建的新系统,BiCodec 是一种单流语音编解码器,可将语音策略性地分解为两种互补的标记类型:用于语言内容的低比特率语义标记和用于说话者特定属性的固定长度全局标记。这种解开的表示与 Qwen2.5 LLM 和思路链 (CoT) 生成方法相结合,既可以实现粗粒度属性控制(例如性别、音高水平),也可以实现细粒度参数调整(例如精确的音高值、语速)。

它是香港科技大学,上海交大,南洋技术大学等单位组成的团队开发的,与香港中文大学的MaskGCT 相比,SparkTTS 使用了大模型。

SparkTTS的结构

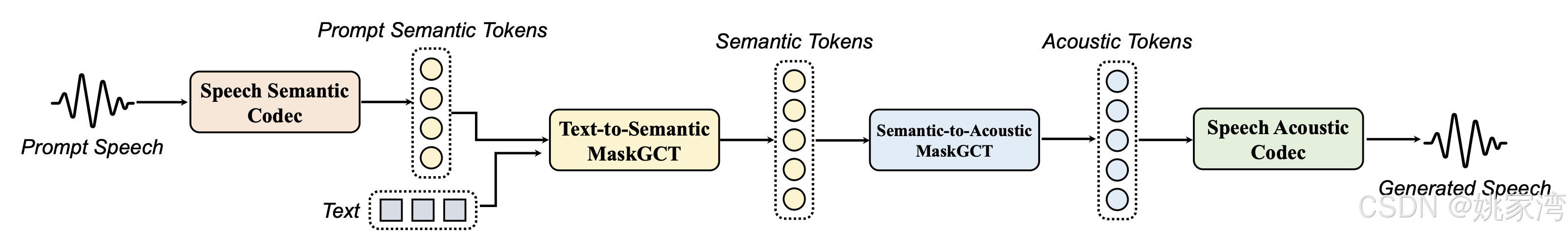

MaskGCT 结构

测试网站

你可以在下列网站做一些测试。

Spark TTS - Text-to-Speech AI Model

Windows 安装

下载 Spark-TTS

- Go to Spark-TTS GitHub

- Click "Code" > "Download ZIP", then extract it.

2. 建立 Conda 环境

- conda create -n sparktts python=3.12 -y

- conda activate sparktts

3. Install Dependencies

pip install -r requirements.txtInstall PyTorch (Auto-Detect CUDA or CPU)

我使用的是RTX4080 显卡。安装cuda 12.4,安装的PyTorch 为2.5.1+cu124。

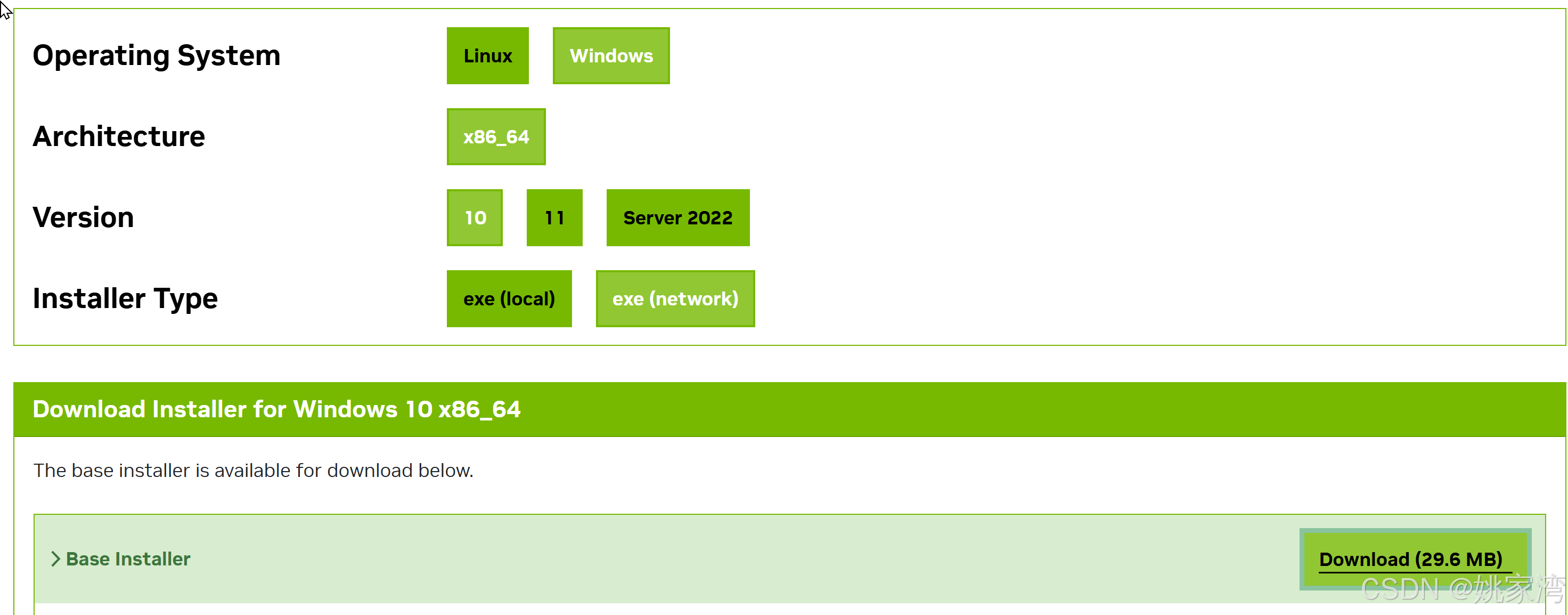

下载cuda 12.4.

安装 PyTorch +cu124

conda install pytorch==2.5.1 torchvision==0.20.1 torchaudio==2.5.1 pytorch-cuda=12.4 -c pytorch -c nvidia5. Download the Model

- mkdir pretrained_models

- git clone https://huggingface.co/SparkAudio/Spark-TTS-0.5B pretrained_models/Spark-TTS-0.5B

遇到问题

运行python webUI.py 时出现:

variable KMP_DUPLICATE_LIB_OK=TRUE to allow the program to continue to execute, but that may cause crashes or silently produce incorrect results. For more information, please see http://www.intel.com/software/products/support/.办法

1 删除 libiomp5md.dll

D:\Users\Yao\anaconda3\Library\bin\libiomp5md.dll2 设置临时环境变量:KMP_DUPLICATE_LIB_OK=TRUE

set KMP_DUPLICATE_LIB_OK=TRUE也在windows 下设置了。

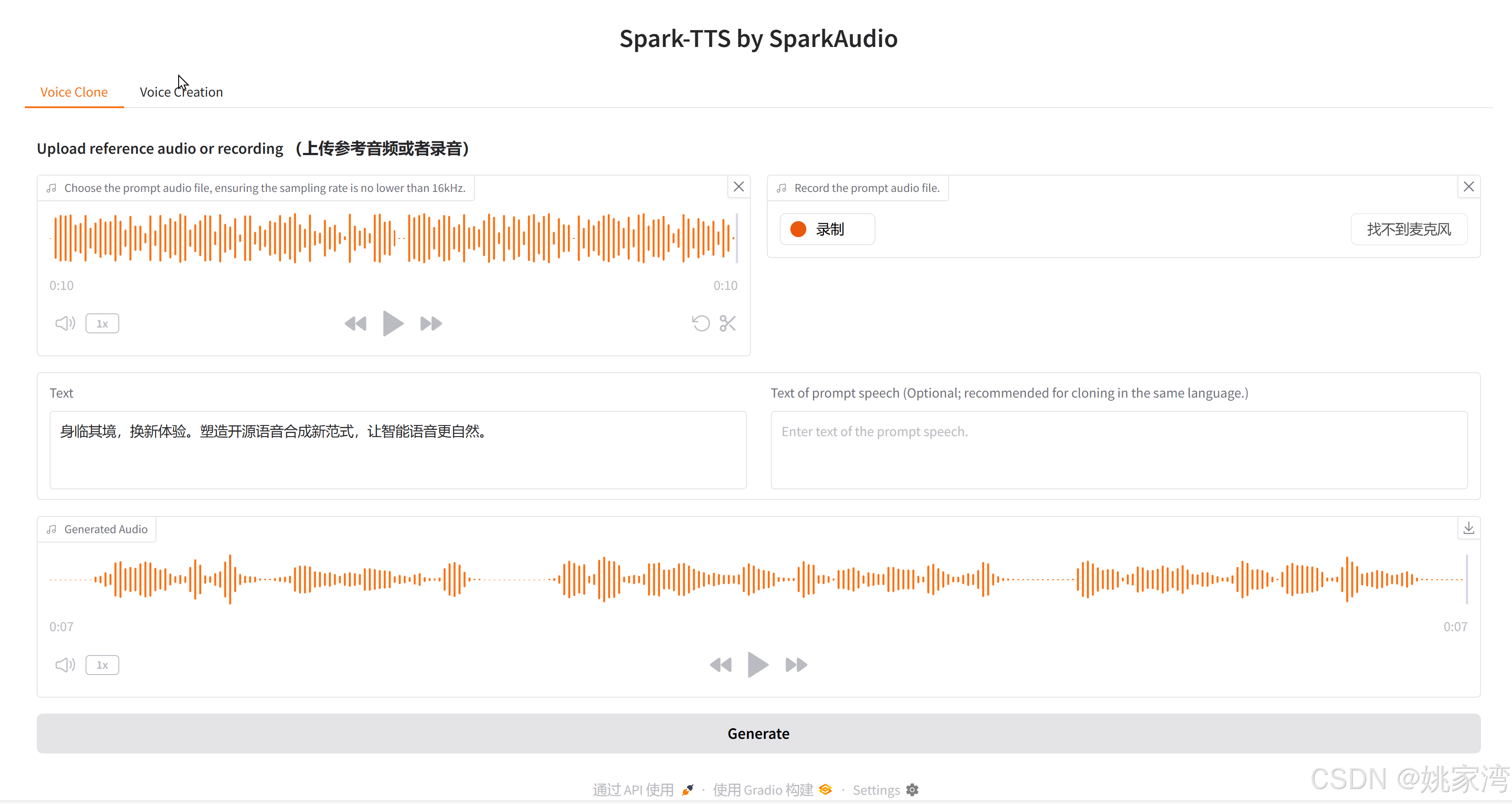

结果

效果明显比MaskGCT 好。转码速度快。

使用Python 调用SparkTTS

改写了使用python 调用SparkTTS 的方式

- from datetime import datetime

- import os

- import soundfile as sf

- import torch

- import logging

- from cli.SparkTTS import SparkTTS

- from sparktts.utils.token_parser import LEVELS_MAP_UI

- # Initialize model

-

- def initialize_model(model_dir="pretrained_models/Spark-TTS-0.5B", device=0):

- """Load the model once at the beginning."""

- logging.info(f"Loading model from: {model_dir}")

- device = torch.device(f"cuda:{device}")

- model = SparkTTS(model_dir, device)

- return model

- def run_tts(

- text,

- model,

- prompt_text=None,

- prompt_speech=None,

- gender=None,

- pitch=None,

- speed=None,

- save_dir="example/results",

- ):

- """Perform TTS inference and save the generated audio."""

- logging.info(f"Saving audio to: {save_dir}")

-

- if prompt_text is not None:

- prompt_text = None if len(prompt_text) <= 1 else prompt_text

-

- # Ensure the save directory exists

- os.makedirs(save_dir, exist_ok=True)

-

- # Generate unique filename using timestamp

- timestamp = datetime.now().strftime("%Y%m%d%H%M%S")

- save_path = os.path.join(save_dir, f"{timestamp}.wav")

-

- logging.info("Starting inference...")

-

- # Perform inference and save the output audio

- with torch.no_grad():

- wav = model.inference(

- text,

- prompt_speech,

- prompt_text,

- gender,

- pitch,

- speed,

- )

-

- sf.write(save_path, wav, samplerate=16000)

-

- logging.info(f"Audio saved at: {save_path}")

-

- return save_path

-

- # Define callback function for voice cloning

- def voice_clone(text, prompt_text, prompt_wav_upload, prompt_wav_record):

- """

- Gradio callback to clone voice using text and optional prompt speech.

- - text: The input text to be synthesised.

- - prompt_text: Additional textual info for the prompt (optional).

- - prompt_wav_upload/prompt_wav_record: Audio files used as reference.

- """

- prompt_speech = prompt_wav_upload if prompt_wav_upload else prompt_wav_record

- prompt_text_clean = None if len(prompt_text) < 2 else prompt_text

-

- audio_output_path = run_tts(

- text,

- model,

- prompt_text=prompt_text_clean,

- prompt_speech=prompt_speech

- )

- return audio_output_path

-

- # Define callback function for creating new voices

- def voice_creation(text, gender, pitch, speed):

- """

- Gradio callback to create a synthetic voice with adjustable parameters.

- - text: The input text for synthesis.

- - gender: 'male' or 'female'.

- - pitch/speed: Ranges mapped by LEVELS_MAP_UI.

- """

- pitch_val = LEVELS_MAP_UI[int(pitch)]

- speed_val = LEVELS_MAP_UI[int(speed)]

- audio_output_path = run_tts(

- text,

- model,

- gender=gender,

- pitch=pitch_val,

- speed=speed_val

- )

- return audio_output_path

- #

-

- model_dir="pretrained_models/Spark-TTS-0.5B"

- device=0

- model = initialize_model(model_dir, device=device)

- text="仅仅懂得应用科学本身是不够的!对人类本身及其命运的关心必然总是培养出努力学习各种技术的兴趣;对尚未解决的物质起源和商品分配的问题的关心——为了我们思想意识的建立,将会给整个人类带来幸福而不是灾难。"

- #prompt_wav_upload="E:\yao2025\Spark-TTS-main\src\demos\鲁豫\luyu_zh.wav"

- prompt_wav_upload="E:\yao2025\yaoaudio.wav"

- prompt_text="朋友们,今天我要对你们说,尽管眼下困难重重,但我依然怀有一个梦。这个梦深深植根于美国梦之中。我梦想有一天,这个国家将会奋起,实现其立国信条的真谛,我们认为这些真理不言而喻:人人生而平等。我梦想有一天,在佐治亚洲的红色山岗上,昔日奴隶的儿子能够同昔日奴隶主的儿子同席而坐,亲如手足。"

- prompt_wav_record=None

- print("TTS ....")

- audio_output_path=voice_clone(text, prompt_text, prompt_wav_upload, prompt_wav_record)

- """

- pitch,音调

- speed 速度

- 通过下面的map

- LEVELS_MAP_UI = {

- 1: 'very_low',

- 2: 'low',

- 3: 'moderate',

- 4: 'high',

- 5: 'very_high'

- }

- """

- #audio_output_path=voice_creation(text,"female","5","5")

- print(audio_output_path)

-

评论记录:

回复评论: